Next: Gustafson-Barsis's Law

Up: Skeptic Postulates For Parallel

Previous: Grosch's Law

Contents

Amdahl's Law

- We defined the speedup factor of a parallel system as the ratio between the time taken by a single processor to solve a given problem instance to the time taken by a parallel system consisting of

processors to solve the same problem instance (see Eqn. 3.4).

processors to solve the same problem instance (see Eqn. 3.4).

- Similar to Grosch's law, Amdahl's law made it so pessimistic to build parallel computer

systems due to the intrinsic limit set on the performance improvement (speed) regardless of the number of processors used.

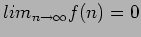

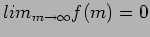

- An interesting observation to make here is that according to Amdahl's law,

is fixed and does not scale with the problem size,

is fixed and does not scale with the problem size,  . However, it has been practically observed that some real parallel algorithms have a fraction that is a function of

. However, it has been practically observed that some real parallel algorithms have a fraction that is a function of  . Let us assume that

. Let us assume that  is a function of

is a function of  such that

such that

|

(3.7) |

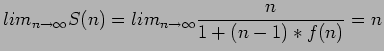

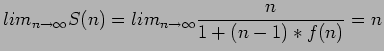

- This is clearly in contradiction to Amdahl's law. It is therefore possible to achieve a

linear speedup factor for large-sized problems, given that

, a condition that has been practically observed.

, a condition that has been practically observed.

- For example, researchers at the Sandia National Laboratories have shown that using a 1024-processor hypercube multiprocessor system for a number of engineering problems, a linear speedup factor can be achieved.

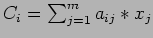

- Consider, for example, the well-known engineering problem of multiplying a large square matrix

by a vector

by a vector  to obtain a vector, that is,

to obtain a vector, that is,  . Assume further that the solution of such a problem is performed on a binary tree architecture consisting of

. Assume further that the solution of such a problem is performed on a binary tree architecture consisting of  nodes (processors).

nodes (processors).

- Initially, the root node stores the vector

and the matrix

and the matrix

is distributed row-wise among the

is distributed row-wise among the  processors such that the maximum number of rows in any processor is

processors such that the maximum number of rows in any processor is  .

.

- A simple algorithm to perform such computation consists of the following three steps:

- The root node sends the vector

to all processors in

to all processors in

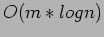

- All processors perform the product

in

in

- All processors send their

values to the root node in

values to the root node in

.

.

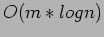

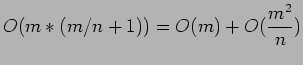

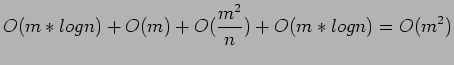

- According to the above algorithm, the amount of computation needed is

- The indivisible part of the computation is equal to

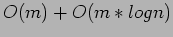

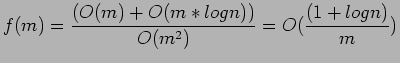

- Therefore, the fraction of computation that is indivisible

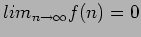

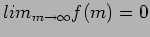

- Notice that

. Hence, contrary to Amdahl's law, a linear speedup can be achieved for such a large-sized problem.

. Hence, contrary to Amdahl's law, a linear speedup can be achieved for such a large-sized problem.

- It should be noted that in presenting the above scenario for solving the matrix vector multiplication problem, we have assumed that the memory size of each processor is large enough to store the maximum number of rows expected.

- This assumption amounts to us saying that with

processors, the memory is

processors, the memory is  times larger. Naturally, this argument is more applicable to message passing parallel architectures than it is to shared memory ones.

times larger. Naturally, this argument is more applicable to message passing parallel architectures than it is to shared memory ones.

Next: Gustafson-Barsis's Law

Up: Skeptic Postulates For Parallel

Previous: Grosch's Law

Contents

Cem Ozdogan

2006-12-27

and the matrix

and the matrix

is distributed row-wise among the

is distributed row-wise among the  processors such that the maximum number of rows in any processor is

processors such that the maximum number of rows in any processor is  .

.

to all processors in

to all processors in

in

in

values to the root node in

values to the root node in

.

.

. Hence, contrary to Amdahl's law, a linear speedup can be achieved for such a large-sized problem.

. Hence, contrary to Amdahl's law, a linear speedup can be achieved for such a large-sized problem.